The solution to AI hallucinations is more data for the user

Apple had to delay the contextual, more personal Apple Intelligence and Siri into late 2025 or early 2026 due to accuracy issues. The feature can't afford to be wrong, because if users don't trust it and have to check its work, then there's no point.

I covered a snippet Mark Gurman shared in his Power On newsletter this morning that seemed interesting. He suggests Apple is delaying the Home Hub (the iPad/HomePod Siri hybrid tablet device) into 2026 – after the contextual AI/Siri upgrade goes live.

That got me thinking. Why delay a product whose first iteration won't even support Apple Intelligence? Even Gurman says the Home Hub with Apple Intelligence won't launch until we get the Luxo Lamp AI-powered robotic arm too.

My guess is optics. Apple could release the Home Hub tomorrow without any real issue. It would listen to commands, show information, and do all of the things, sans Apple Intelligence, and work fine.

However, Siri is in bad shape in the public eye. While I may still be a Siri unicorn who has no real issues with Apple's smart assistant, many see it as a failure. The Home Hub's reputation will be tied to Siri's, whether Apple likes it or not.

So, Apple needs a win. That explains why the Home Hub hardware is being delayed, but I'm not convinced we need to wait that long.

AI is inherently dumb and broken, so treat it that way

There's really not much we can do about artificial intelligence and its tendency to confidently share lies. That's more of a feature than a bug, sadly.

However, I've got a novel idea that I'd be happy for Apple to steal: show your work.

If the whole point of this new contextually aware, more personal Apple Intelligence and Siri is to parse all of your on-device data to provide output, then surface that data in the result. Sure, the user is still checking its work, but in a streamlined way.

There's likely never going to be a situation where artificial intelligence can operate with zero chance of hallucination. So, instead of making customers worry about whether this answer is the one that's wrong, give them the data in the answer.

The "AI" part of it should still give you a voice response that summarizes what it found. That's the part that can be wrong some of the time, but it should serve as an overview, not gospel.

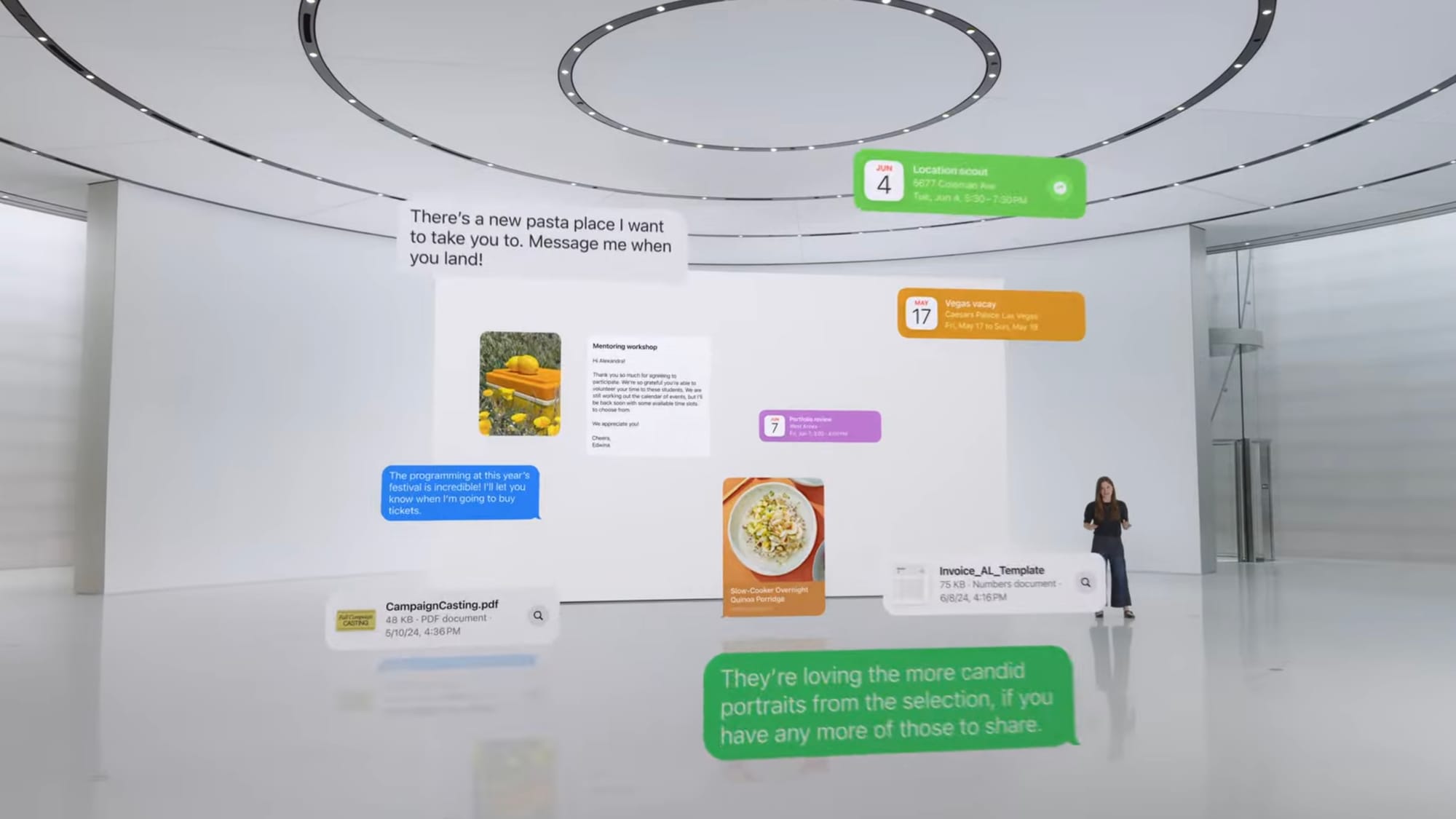

Then, attached to that response, there should be a kind of interactive bento with snippets of the data that the generated response pulled from. Using Apple's mom-on-a-plane example, the bento would show: the part of the iMessage that discussed getting an Uber after the flight lands, the ticket in Mail, the Calendar data of the flight, weather conditions, and a link out to a preconfigured Uber order for after the flight lands.

Apple's ad example would show a bento with the calendar event, photos, a contact card, and iMessage data.
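To make the idea concrete, here's a rough sketch in Python of the shape I have in mind: a generated summary that always travels with the raw snippets it was built from. None of this is Apple's API. The class names, fields, and sample text are made up for illustration, and the text rendering is just a stand-in for a real bento UI.

```python
from dataclasses import dataclass, field


@dataclass
class SourceSnippet:
    """One tile in the bento: a piece of on-device data the answer drew from."""
    app: str        # e.g. "Messages", "Mail", "Calendar" (hypothetical labels)
    excerpt: str    # the raw text shown to the user, not a paraphrase
    link: str = ""  # hypothetical deep link back to the original item


@dataclass
class AssistantAnswer:
    """A generated summary bundled with the data it was generated from."""
    summary: str
    sources: list[SourceSnippet] = field(default_factory=list)

    def is_verifiable(self) -> bool:
        # An answer with no attached sources gives the user nothing to check.
        return len(self.sources) > 0


def render(answer: AssistantAnswer) -> str:
    """Plain-text stand-in for the bento: summary first, then each source."""
    lines = [answer.summary]
    if not answer.is_verifiable():
        lines.append("(no sources found, treat this answer with caution)")
    for snippet in answer.sources:
        lines.append(f"[{snippet.app}] {snippet.excerpt}")
    return "\n".join(lines)


# Placeholder data loosely modeled on the flight example.
answer = AssistantAnswer(
    summary="Mom's flight lands this afternoon.",
    sources=[
        SourceSnippet("Mail", "Flight ticket confirmation"),
        SourceSnippet("Calendar", "Mom's flight"),
    ],
)
print(render(answer))
```

The design choice that matters is that the summary and its sources are one object. The UI never gets a summary without also getting the evidence, and an answer with nothing behind it is flagged instead of presented as fact.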

Make the information glanceable and actionable. That's all doable today and already kind of exists. During the WWDC demo, Apple showed that Siri results include links back to where it found information. Just make that more prominent, show more data points when available, and remind people to check for accuracy issues.

I don't think this feature needs to wait a year. It's never going to be 100% correct. So ship it with good, actionable UI that empowers the user, or don't ship it. I don't want to wait around for a Home Hub product that could have launched five years ago.